According to MIT Technology Review, when a robot decides to take a specific route through a warehouse, or a driverless car decides to turn left or right, how does its artificial intelligence (AI) algorithm reach that decision? At present, AI cannot explain to people the reasons behind its decisions. This may be a big problem that needs to be resolved.

In 2016, a strange driverless car appeared on a quiet road in Monmouth County, New Jersey. It was a test car developed by researchers at the chipmaker NVIDIA. Although it looked no different from the autonomous cars of Google, Tesla, and General Motors, it showed more of the power of AI.

Getting a car to drive itself is an impressive feat, but it is also a bit unsettling, because it is not very clear how the car makes its decisions. The information collected by the car's sensors is passed directly to a vast artificial neural network, which processes the data and then issues the commands that operate the steering wheel, brakes, and other systems.

On the surface, the car's responses seem to match those of a human driver. But when something unexpected happens, such as hitting a tree or running a red light, we may have a hard time figuring out why. These AI algorithms are so complex that even the engineers who design them may be unable to isolate the reason for any single action. And there is currently no obvious way to design such a system so that it can always explain why it made a given decision.

The mysterious mind of this driverless car points to a looming issue with AI. The car's algorithms are based on an AI technique known as deep learning, which has proven in recent years to be a powerful tool for solving many problems. It is widely used for image captioning, speech recognition, and language translation. Now the same technique is expected to help diagnose deadly diseases, make multi-million dollar trading decisions, and do countless other things that could transform entire industries.

But this will not happen, or should not happen, until we find ways to make techniques such as deep learning more understandable to their creators and more accountable to their users. Otherwise it will be difficult to predict when failures might occur, and failures are inevitable. This is one of the reasons why NVIDIA's driverless car is still experimental.

Mathematical models are already being used to help determine who should get parole, who should get a loan, and who should be hired. If you had access to these models, you could probably understand their reasoning. But banks, the military, employers, and others are now turning their attention to more complex machine learning approaches, which could make automated decision-making altogether inscrutable, and deep learning represents a fundamentally different way of programming computers. Tommi Jaakkola, a professor of machine learning at the Massachusetts Institute of Technology, said: "This issue is not only relevant to the present, but will be even more relevant in the future. Whether it is an investment decision, a medical decision, or a military decision, you can't simply rely on a 'black box'."

It has been suggested that the ability to ask an AI system how it reached its conclusions should be a basic legal right. Beginning in the summer of 2018, the EU may require companies to give users an explanation for decisions reached by their automated systems. This may be impossible, even for systems that seem relatively simple on the surface, such as the apps and websites that use deep learning to serve ads or recommend songs. The computers running these services have programmed themselves, and they work in ways we can't understand. Even the engineers who build them cannot fully explain their behavior.

This raises many mind-boggling questions. As the technology advances, we may soon cross a threshold beyond which using AI requires a leap of faith. Although we humans are not always able to explain our own thought processes, we have found ways to trust and judge one another through intuition. Will that be possible with machines, and do they think in anything like a human way? We have never before built machines whose workings their creators could not understand. How do we get along with these unpredictable, inscrutable intelligent machines? These questions prompted me to set out on a journey to decode AI algorithms, from Apple to Google to many other places, including a meeting with one of the great philosophers of our time.

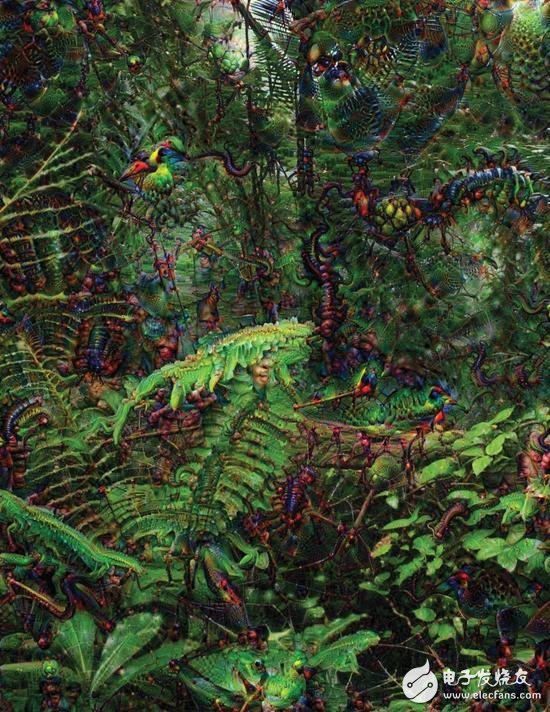

Figure: Artist Adam Ferriss created this image using Google's Deep Dream program, which adjusts an image to stimulate the pattern-recognition capabilities of a deep neural network. This picture was created using a middle layer of the neural network.

In 2015, a research team at Mount Sinai Hospital in New York was inspired to apply deep learning to the hospital's vast database of patient records. This data set has hundreds of variables on each patient, including test results and doctors' diagnoses. The resulting program, which the researchers named Deep Patient, was trained using data from more than 700,000 patients. When tested on new records, it proved remarkably good at predicting disease. Without any expert guidance, Deep Patient discovered patterns hidden in the hospital data and could identify a range of diseases, including liver cancer, from a patient's symptoms. Joel Dudley, who leads the Mount Sinai team, said: "Many methods can predict disease from patient records, but our approach is better."

At the same time, Deep Patient is a bit puzzling. It is surprisingly accurate with psychiatric disorders such as schizophrenia, even though, as is well known, schizophrenia is notoriously hard even for doctors to diagnose. Dudley wants to know how Deep Patient manages this, but he can't find the answer, because the tool offers no clues. If a tool like Deep Patient is really going to help doctors, it should ideally give the reasoning behind its predictions, to reassure them that its conclusions are accurate. But Dudley said: "Although we can build these models, we really don't know how they make decisions."

AI has not always been this way. From the beginning, there were two schools of thought on how understandable, or explainable, AI ought to be. Many people thought it made the most sense to build machines that reason according to rules and logic, because their inner workings are transparent and anyone can inspect their code. Others believed that machines are more likely to become intelligent if they take inspiration from biology and learn by observation and experience. This meant turning computer programming on its head. Instead of a programmer writing the instructions to solve a problem, the program generates its own algorithm from sample data and the expected output. The machine learning techniques that evolved into today's most powerful AI systems followed the latter path: the machine essentially programs itself.
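
The contrast between the two schools can be illustrated with a toy example. The sketch below is only an illustration under simple assumptions, not any system mentioned in this article: `rule_based_or` is a rule written by hand, while `train_perceptron` (a classic single-neuron learner, with made-up names and settings) derives equivalent behavior purely from sample inputs and expected outputs.

```python
# Hand-written rule: the programmer states the logic explicitly.
def rule_based_or(a, b):
    return 1 if (a == 1 or b == 1) else 0


def predict(weights, bias, x1, x2):
    """A single artificial neuron: weighted sum followed by a threshold."""
    return 1 if (weights[0] * x1 + weights[1] * x2 + bias) > 0 else 0


def train_perceptron(samples, epochs=20, lr=0.1):
    """Learn weights from (inputs, expected_output) pairs (perceptron rule)."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            error = target - predict(weights, bias, x1, x2)
            # Nudge each weight in the direction that reduces the error.
            weights[0] += lr * error * x1
            weights[1] += lr * error * x2
            bias += lr * error
    return weights, bias


# Sample data and expected outputs; no rule is given to the learner.
samples = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
weights, bias = train_perceptron(samples)
learned = [predict(weights, bias, x1, x2) for (x1, x2), _ in samples]
written = [rule_based_or(x1, x2) for (x1, x2), _ in samples]
```

Both versions give the same answers on these four inputs, but only the first can be read as a rule. The second is just a handful of numbers, which hints at why much larger learned systems are hard to inspect.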

Initially, this approach was of very limited practical use. Through the 1960s and 1970s, it remained largely confined to the fringes of the field. Then the computerization of many industries and the emergence of large data sets rekindled interest. That inspired the development of more powerful machine learning techniques, especially new versions of one known as the artificial neural network. By the 1990s, neural networks could automatically digitize handwritten text.

But it was not until the early 2010s, after several ingenious adjustments and refinements, that very large, or "deep", neural networks made great progress in automated perception. Deep learning is the main driving force behind today's explosive growth in AI. It has given computers extraordinary capabilities, such as the ability to recognize spoken words almost as well as a person can, a skill too complex to code into a machine by hand. Deep learning has transformed computer vision and dramatically improved machine translation. It is now being used to guide key decisions in areas such as healthcare, finance, and manufacturing.

The workings of any machine learning technology are inherently more opaque than those of a hand-coded system, even to computer scientists. This is not to say that all future AI techniques will be equally unknowable, but by its nature, deep learning is a particularly dark "black box." You can't simply look inside a deep neural network to see how it works. A network's reasoning is embedded in the collective behavior of thousands of simulated neurons, arranged in dozens or even hundreds of intricately interconnected layers. Each neuron in the first layer receives an input, such as the intensity of a pixel in an image, then performs a calculation and outputs a new signal. These outputs are fed, through a complex web of connections, to the neurons in the next layer, and so on, layer by layer, until a final output is produced. In addition, a process called "backpropagation" adjusts the calculations of individual neurons so that the network learns to produce the expected output.
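
The layer-by-layer calculation and the backpropagation adjustment described above can be sketched at a very small scale. The following is a minimal illustration, assuming a network with two inputs, two hidden neurons, and one output neuron; the weights, input values, learning rate, and function names are all made up for the example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w_hidden, w_out):
    """Pass the input through one hidden layer, then one output neuron."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)))
    return hidden, output


def backprop_step(x, target, w_hidden, w_out, lr=0.5):
    """One gradient-descent update on the squared error for a single example."""
    hidden, output = forward(x, w_hidden, w_out)
    # Error signal at the output (derivative of 0.5 * (output - target) ** 2).
    delta_out = (output - target) * output * (1.0 - output)
    # Propagate the error signal backwards to each hidden neuron.
    delta_hidden = [delta_out * w_out[j] * hidden[j] * (1.0 - hidden[j])
                    for j in range(len(hidden))]
    new_w_out = [w_out[j] - lr * delta_out * hidden[j]
                 for j in range(len(hidden))]
    new_w_hidden = [[w_hidden[j][i] - lr * delta_hidden[j] * x[i]
                     for i in range(len(x))]
                    for j in range(len(hidden))]
    return new_w_hidden, new_w_out


x = [0.8, 0.2]                          # e.g. two pixel intensities (made up)
target = 1.0                            # the expected output for this input
w_hidden = [[0.1, -0.2], [0.4, 0.3]]    # arbitrary starting weights
w_out = [0.2, -0.1]

_, before = forward(x, w_hidden, w_out)
for _ in range(200):
    w_hidden, w_out = backprop_step(x, target, w_hidden, w_out)
_, after = forward(x, w_hidden, w_out)

error_before = 0.5 * (before - target) ** 2
error_after = 0.5 * (after - target) ** 2
```

After training, the output is closer to the expected value, yet the explanation for that lives only in six adjusted numbers. A real deep network has millions of them, which is the opacity the paragraph above describes.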

Figure: Image created by artist Adam Ferriss using the Google Deep Dream program

The many layers of a deep network allow it to recognize things at different levels of abstraction. In a system designed to recognize dogs, for example, the lower layers recognize simple things such as colors or outlines, the higher layers recognize more complex things such as fur or eyes, and the topmost layer identifies the object as a dog. The same approach can be applied to other kinds of input that let a machine teach itself, including the sounds that make up words in speech, the letters and words that form sentences in text, or the steering-wheel movements required for driving.

Researchers have used many clever strategies to capture and explain in more detail what happens inside these systems. In 2015, Google researchers modified a deep-learning-based image recognition algorithm so that, instead of finding objects in images, it would generate or modify them. By effectively running the algorithm in reverse, they could discover the features it uses to recognize, say, a bird or a building.

The images produced by the program, called Deep Dream, show strange-looking animals emerging from clouds or plants, and hallucinatory pagodas appearing in forests or mountains. These images prove that deep learning is not completely inscrutable: the algorithms home in on familiar visual features such as beaks or feathers. But the pictures also show how different deep learning is from human perception, in that it may seize on things we would know to ignore. Google researchers noticed that when the algorithm generated images of a dumbbell, it also generated a human arm holding it. The machine had concluded that the arm was part of the dumbbell.
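
The idea of running a recognizer "in reverse" can be shown with a toy. The sketch below is not Google's Deep Dream code; it assumes a single hypothetical feature detector that responds to a striped pattern in a four-pixel image, and it repeatedly changes the pixels, rather than the detector, so that the detector responds more strongly.

```python
def activation(image, detector):
    """Response of one hypothetical feature detector: a weighted sum of pixels."""
    return sum(w * p for w, p in zip(detector, image))


def dream_step(image, detector, step_size=0.1):
    """Nudge every pixel in the direction that increases the detector's response.

    For a weighted sum, the gradient with respect to each pixel is simply the
    matching detector weight. Pixel values are clamped to the range [0, 1].
    """
    return [min(1.0, max(0.0, p + step_size * w))
            for p, w in zip(image, detector)]


detector = [1.0, -1.0, 1.0, -1.0]   # hypothetical "stripe" detector
image = [0.5, 0.5, 0.5, 0.5]        # flat grey starting image

before = activation(image, detector)
for _ in range(10):
    image = dream_step(image, detector)
after = activation(image, detector)
```

The flat grey image turns into alternating bright and dark pixels, the pattern this detector "wants" to see. In a deep network the same procedure, applied to neurons in the middle layers, produces the dreamlike pictures described above.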

Further progress has been made using ideas borrowed from neuroscience and cognitive science. A team led by Jeff Clune, an associate professor at the University of Wyoming, has tested deep neural networks with the AI equivalent of optical illusions. In 2015, Clune's team showed how certain images could fool a neural network into perceiving things that are not there, because the images exploit the low-level patterns the system searches for. Clune's colleague Jason Yosinski also built a probe-like tool that targets a neuron in the middle of the network and searches for the image that activates it most strongly. The images that turn up are abstract, highlighting the mysterious nature of machine perception.

However, we need more than a glimpse into AI's thinking, and there is no simple solution. The interplay of calculations inside a deep neural network is critical to high-level pattern recognition and complex decision making, but those calculations are a quagmire of mathematical functions and variables. Jaakkola said: "If you have a small neural network, you may be able to understand it. But when it becomes very large, with thousands of units in each layer and hundreds of layers, it becomes quite hard to understand."

Jaakkola's colleague Regina Barzilay focuses on applying machine learning to medicine. Some years ago, at the age of 43, Barzilay was diagnosed with breast cancer. The diagnosis itself was shocking, but Barzilay was also frustrated that cutting-edge statistics and machine learning methods were not being used to help oncology research or to guide treatment. She said that AI has great potential to revolutionize medicine, and that realizing this potential means going beyond medical records alone. She wants to use more of the raw data that is currently underutilized, such as imaging data, pathology data, and so on.

After finishing cancer treatment last year, Barzilay and her students began working with doctors at Massachusetts General Hospital to develop a system that analyzes pathology reports to identify patients with specific clinical characteristics that researchers may want to study. However, Barzilay knows that this system needs to be able to explain its reasoning. To this end, Barzilay and Jaakkola added a step in which the system extracts and highlights snippets of text that are representative of the patterns it has discovered. Barzilay and her colleagues have also developed a deep learning algorithm that finds early signs of breast cancer in mammograms. Their goal is to give this system the same ability to explain its reasoning. Barzilay said: "You really need a loop through which machines and humans can collaborate."

The US military is investing billions of dollars in projects that use machine learning to guide tanks and aircraft, identify targets, and help analysts sift through large amounts of intelligence. Here, more than in other areas of research, there is little room for algorithmic mystery, and the US Department of Defense has identified explainability as a key stumbling block. David Gunning, a program manager at the Defense Advanced Research Projects Agency (DARPA), the Department of Defense's research and development organization, oversees a program called Explainable Artificial Intelligence. He previously helped oversee the DARPA project that ultimately led to the birth of Siri.

Gunning said that automation is creeping into countless areas of the military. Intelligence analysts are testing machine learning as a new way to identify patterns in massive amounts of intelligence data. Many unmanned ground vehicles and aircraft are being developed and tested. But soldiers are unlikely to feel comfortable in a robotic tank that cannot explain itself, and analysts are reluctant to act on information that comes without supporting reasoning. Gunning said: "By their nature, these machine learning systems often generate a lot of false alarms, so analysts need extra help to understand why they give such advice."

In March of this year, DARPA selected 13 projects from academia and industry to receive funding under Gunning's program, including one led by Carlos Guestrin, a professor at the University of Washington. Guestrin and his colleagues have found a way for machine learning systems to provide a rationale for their outputs. In essence, under their method the computer automatically finds a few examples from the data set and presents them as a short explanation. For example, a system that classifies e-mail messages from terrorists may draw on tens of millions of messages for its training and decision making. But using the University of Washington team's approach, it can highlight specific keywords found in a message. Guestrin's team has also designed image recognition systems that hint at their reasoning by highlighting the most significant parts of an image.
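
Keyword highlighting of this kind can be approximated in a few lines. The sketch below is not the University of Washington team's method; it substitutes a simpler leave-one-word-out test, and the classifier, its word weights, and the sample message are all hypothetical. Each word is removed in turn, and the words whose removal lowers the classifier's score the most are reported as the explanation.

```python
# Hypothetical word weights standing in for a trained "suspicious message"
# classifier. A real model would be far larger and would not expose weights.
WEIGHTS = {"urgent": 1.5, "transfer": 2.0, "meeting": -0.5, "lunch": -1.0}


def score(words):
    """Toy classifier: higher scores mean 'more suspicious'."""
    return sum(WEIGHTS.get(word, 0.0) for word in words)


def explain(words, top_k=2):
    """Rank words by how much the score drops when each one is removed."""
    base = score(words)
    impact = []
    for i, word in enumerate(words):
        without = words[:i] + words[i + 1:]
        impact.append((base - score(without), word))
    impact.sort(reverse=True)
    return [word for _, word in impact[:top_k]]


message = "urgent please transfer funds before lunch".split()
highlights = explain(message)   # the words that pushed the score up the most
```

Note that `explain` only ever calls `score`, so the same probing works on a model whose internals cannot be read at all.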

One disadvantage of this and other similar techniques is that the explanations they provide are always simplified, meaning that some important information may be lost. Guestrin said: "We have not yet realized the whole dream, in which AI can have a conversation with you and explain itself. We still have a long way to go to create truly interpretable AI."

Understanding AI's reasoning is important not only in high-stakes areas such as cancer diagnosis or military maneuvers. As this technology becomes a common and important part of everyday life, it is equally important that AI can explain itself. Tom Gruber, head of Apple's Siri team, said that explainability is a key consideration for his team as they try to make Siri a smarter and more capable virtual assistant. Gruber would not discuss Siri's future plans, but it is easy to imagine that if you receive a restaurant recommendation from Siri, you will want to know why it was recommended. Ruslan Salakhutdinov, director of AI research at Apple and an associate professor at Carnegie Mellon University, sees explainability as the core of the evolving relationship between humans and intelligent machines.

Just as many aspects of human behavior cannot be explained, perhaps AI will not be able to explain everything it does. Clune said: "Even if someone can give you a plausible explanation, it may not be complete, and the same is true for AI. This may be an essential part of intelligence: only some of the behavior can be explained by reason. Some behaviors are just instinct, or subconscious, or have no reason at all." If this is the case, then at some stage we may have to simply trust AI's judgment, or not use it at all. Likewise, that judgment will have to incorporate social intelligence. Just as society is built on a contract of expected behavior, we need to design AI systems that respect and fit our social rules. If we are going to build robotic tanks and other killing machines, their decisions must also meet our ethical standards.

To explore these abstract concepts, I visited Daniel Dennett, a renowned philosopher and cognitive scientist at Tufts University. In his latest book, From Bacteria to Bach and Back, Dennett says that an essential part of the evolution of intelligence itself is the creation of systems that can perform tasks their creators do not know how to perform. Dennett said: "The question is what kind of efforts we must make to do this. What standards do we set for them, and for ourselves?"

Dennett also sounded a warning about the quest for explainable AI. He said: "I think if we want to use these things and rely on them, then we need to grasp as firmly as possible how and why they give us the answers they do." But since there may be no perfect answer, we should be cautious about AI's explanations, no matter how smart the machine becomes. Dennett said: "If they can't give an explanation better than we can, then we should not trust them."
