
Studies have shown that humans interact with the world primarily through vision, and that we process images many times faster than other forms of information such as written text. Augmented reality (AR) is a close relative of virtual reality (VR), and it gives users greater insight into their surrounding environment. The main difference between the two is that AR enriches the real world with virtual objects such as text or other visual elements, which lets users of an AR system interact with their environment safely and efficiently. In virtual reality, by contrast, users are immersed in an artificially created environment. A combination of augmented and virtual reality is often described as presenting mixed reality (MR) to the user. Many of us have used AR in our daily lives without realizing it, for example when navigating by road with a mobile device or when playing games such as Pokémon GO.

Virtual reality, augmented reality and mixed reality

When it comes to AR and its applications, one of the first things that comes to mind is the heads-up display (HUD). HUDs are used in aerospace and automotive applications, allowing users to see relevant aircraft or vehicle information without looking down at the dashboard. The heads-up display is one of the simpler AR applications. AR applications with more advanced features, such as wearable technology, are often referred to as smart augmented reality, and according to Tractica this market was expected to reach $2.3 billion by 2020.

Augmented reality applications and use cases

AR is entering a wide range of applications across the industrial, military, manufacturing, medical, social and commercial sectors, and its numerous use cases are driving widespread adoption. In the commercial world, AR focuses primarily on social media applications, such as identifying the person you are talking to and displaying biographical information about them. AR also lets consumers view products that are otherwise difficult to access, such as cars, yachts and buildings.

Many AR applications depend on smart glasses worn by the user. In a manufacturing environment, smart glasses can increase efficiency, for example by replacing paper operating manuals and showing the user how to assemble parts. In the medical field, smart glasses make it easier to share medical records and details of trauma and injury, providing treatment information to first responders and, later, to emergency room personnel.

*Examples of the use of smart glasses in industrial environments*

A typical example is a large parcel logistics company that is currently using AR smart glasses to read the barcode on the shipping label. After the barcode is scanned, the smart glasses communicate with the company server over the WiFi infrastructure to determine the final destination of the package. Once the destination is known, the smart glasses can tell the user where to stack the package for onward shipping.

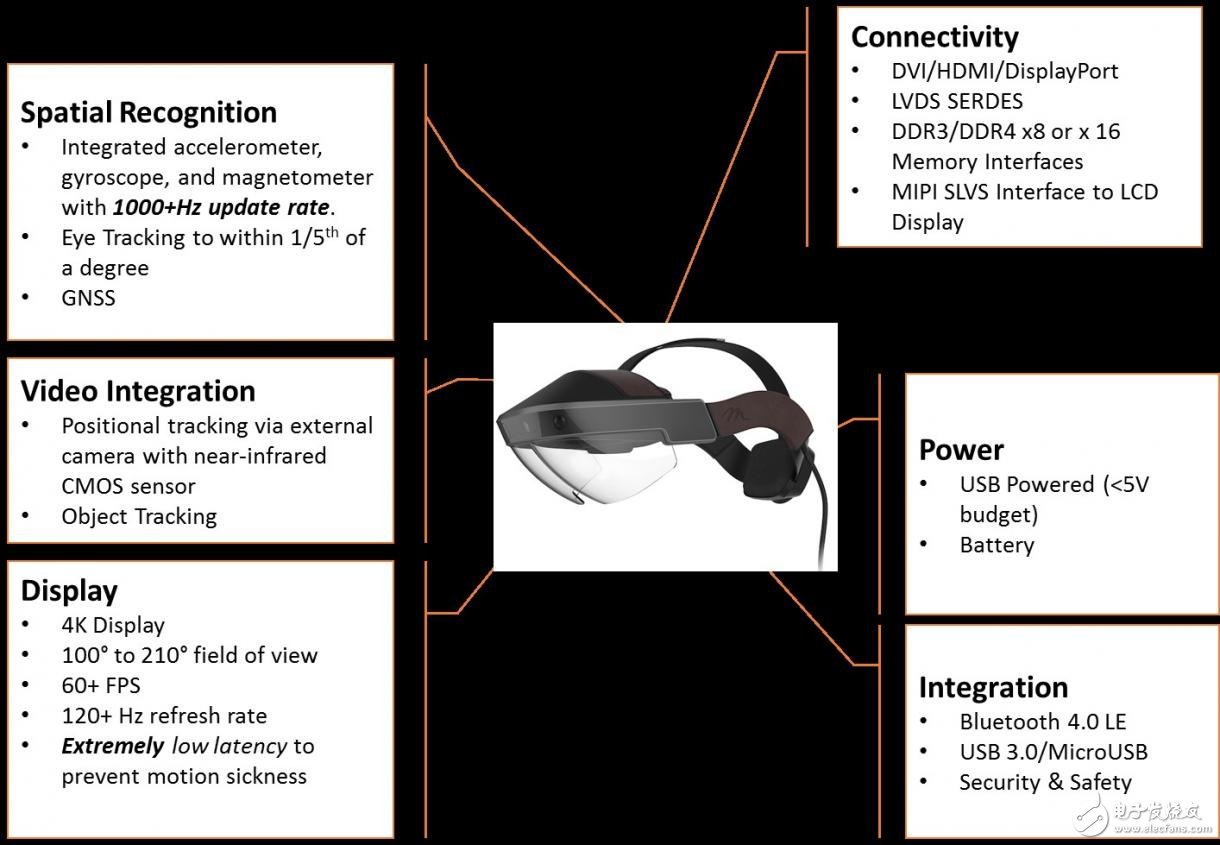

Regardless of application and use case, designing an AR system means balancing several conflicting requirements, including performance, security, power consumption and future compatibility. The designer must take all of these into account to arrive at the best solution for the AR system.

Implementing an AR system

These complex AR systems must be able to connect to multiple camera sensors and process their data so that the system understands its surrounding environment. The camera sensors may operate in different bands of the electromagnetic spectrum, such as infrared or near infrared. In addition, the system may take input from sensors outside the electromagnetic spectrum, such as MEMS accelerometers and gyroscopes for detecting movement and rotation, as well as position information from Global Navigation Satellite Systems (GNSS). Embedded vision systems that fuse information from many different types of sensors are commonly referred to as heterogeneous sensor fusion systems. AR systems also require high frame rates and the ability to perform real-time analysis, extracting and processing the information contained in each frame. Providing the processing power to meet these requirements is a decisive factor in component selection.
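As a rough illustration of fusing two of the sensor types mentioned above, the sketch below combines a gyroscope and an accelerometer into a single pitch estimate using a complementary filter. The article does not name a fusion algorithm, so the choice of filter, the function names, the axis convention and the `alpha` weighting are all assumptions made for this example.

```python
import math


def accel_pitch(ax, ay, az):
    """Pitch angle in radians implied by the gravity vector (assumed axis convention)."""
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))


def complementary_filter(pitch, gyro_rate, accel, dt, alpha=0.98):
    """One filter step: trust the gyro short-term and the accelerometer long-term.

    pitch     -- previous pitch estimate in radians
    gyro_rate -- angular rate about the pitch axis in rad/s
    accel     -- (ax, ay, az) accelerometer reading
    dt        -- time since the previous sample in seconds
    alpha     -- weighting between the two sources (illustrative value)
    """
    gyro_estimate = pitch + gyro_rate * dt
    return alpha * gyro_estimate + (1.0 - alpha) * accel_pitch(*accel)


def track_pitch(samples, dt, alpha=0.98):
    """Run the filter over (gyro_rate, accel) samples and return every estimate."""
    pitch = 0.0
    history = []
    for gyro_rate, accel in samples:
        pitch = complementary_filter(pitch, gyro_rate, accel, dt, alpha)
        history.append(pitch)
    return history
```

The gyroscope alone would drift as its bias is integrated, and the accelerometer alone is noisy under motion; blending the two is one simple way to get a stable orientation estimate. A production system would more likely use a Kalman-style filter and would also fold in camera and GNSS data.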

Anatomy of the AR system

The All Programmable Zynq-7000 SoC or Zynq UltraScale+ MPSoC can be used to implement the processing core of the AR system. These devices are inherently heterogeneous processing systems that combine ARM processors with high-performance programmable logic. The Zynq UltraScale+ MPSoC is the next generation of the Zynq-7000 SoC and adds an ARM Mali-400 GPU. Some members of the family also include a hardened video encoder that supports the H.265 (HEVC) standard.

These devices let designers partition the system architecture optimally, using the processors for real-time analytics and for the traditional processor tasks supported by the software ecosystem. The programmable logic can be used to implement sensor interfaces and processing, which brings several benefits, including:

• Parallel implementation of N image processing pipelines, as the application requires.

• Any-to-any connectivity: the ability to define and interface with any sensor, communication protocol or display standard, providing flexibility and a future upgrade path.

To implement the image processing pipelines and sensor fusion algorithms, we can take advantage of the high-level synthesis capabilities available in tools such as Vivado HLS and SDSoC. These tools come with a variety of specialized libraries, including OpenCV support. To reduce the time-to-market of an AR system, a wide range of third-party IP can also be used. This IP is developed for AR, for embedded vision and specifically for Xilinx technology. Xylon is one supplier of such IP modules. It offers the logiBRICKS family of IP cores, which can be integrated quickly into the Vivado design environment using drag-and-drop, so that a system can be up and running quickly. Another IP supplier is Omnitek, which offers a range of IP modules that address key AR requirements, such as real-time image warping and 3D processing.

Designers must also consider the unique aspects of an AR system. The system not only needs to interface with the cameras and sensors that observe the user's surroundings, it also needs to execute the algorithms required by the application and use case. At the same time, it must be able to track the user's eyes and determine their line of sight, in order to know where they are looking. This is typically done by adding a camera that looks at the user's face and implementing an eye tracking algorithm. Once implemented, the algorithm enables the AR system to follow the user's gaze and decide what to send to the AR display, making efficient use of bandwidth and processing resources. Performing this detection and tracking, however, is itself a highly computationally intensive task.
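One way to picture how a gaze estimate can reduce display bandwidth is to send full detail only for a window around the point the user is looking at. The sketch below is an assumption about how such a selection could work, not a description of any particular product; the function names, the rectangular window and the example resolutions are hypothetical.

```python
def gaze_region(gaze, frame_size, roi_size):
    """Return (left, top, width, height) of a window centred on the gaze point.

    The window is clamped so that it always lies fully inside the frame.
    All arguments are (x, y) or (width, height) tuples in pixels.
    """
    gx, gy = gaze
    frame_w, frame_h = frame_size
    roi_w = min(roi_size[0], frame_w)
    roi_h = min(roi_size[1], frame_h)
    left = min(max(gx - roi_w // 2, 0), frame_w - roi_w)
    top = min(max(gy - roi_h // 2, 0), frame_h - roi_h)
    return left, top, roi_w, roi_h


def pixel_fraction(frame_size, roi_size):
    """Fraction of the frame's pixels covered by the gaze window."""
    roi_w = min(roi_size[0], frame_size[0])
    roi_h = min(roi_size[1], frame_size[1])
    return (roi_w * roi_h) / (frame_size[0] * frame_size[1])


if __name__ == "__main__":
    frame = (1920, 1080)   # illustrative display resolution
    window = (640, 360)    # illustrative high-detail window
    print(gaze_region((960, 540), frame, window))
    print(pixel_fraction(frame, window))
```

With these illustrative sizes the high-detail window covers one ninth of the frame, which gives a feel for the potential saving; the eye tracking that produces the gaze point remains the expensive part.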

Most AR systems are portable and untethered, and many are wearable systems such as smart glasses. Implementing this level of processing in a power-constrained environment is therefore a particular challenge. Both the Zynq SoC and the Zynq UltraScale+ MPSoC families deliver high performance per watt, and they offer several options for reducing operating power further. At the extreme, the processors can enter a standby mode from which they can be woken by one of several sources, and the programmable-logic half of the device can be powered down. These options can be applied once the AR system detects that it is idle, extending battery life. While the AR system is operating, processor units that are not currently in use can be clock-gated to reduce power consumption. In the programmable logic, very high power efficiency can also be achieved by following simple design rules, such as making efficient use of hard macros, planning control signals carefully, and applying intelligent clock gating to areas of the device that are not currently needed.
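The effect of dropping into a low-power mode when idle can be estimated with simple duty-cycle arithmetic: average power is the time-weighted mix of active and idle power, and battery life is capacity divided by that average. The sketch below shows the calculation; the function names are hypothetical, and any figures passed in would be assumptions rather than measured Zynq data.

```python
def average_power_w(active_w, idle_w, active_fraction):
    """Time-weighted average power in watts.

    active_w        -- power drawn while processing frames
    idle_w          -- power drawn in the low-power idle mode
    active_fraction -- share of time spent active, between 0 and 1
    """
    if not 0.0 <= active_fraction <= 1.0:
        raise ValueError("active_fraction must be between 0 and 1")
    return active_fraction * active_w + (1.0 - active_fraction) * idle_w


def battery_life_h(capacity_wh, active_w, idle_w, active_fraction):
    """Estimated battery life in hours for a battery of capacity_wh watt-hours."""
    return capacity_wh / average_power_w(active_w, idle_w, active_fraction)
```

The calculation makes the design trade-off visible: when the device is idle for most of the time, lowering idle power has a larger effect on battery life than shaving the same amount off active power.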

Several AR applications, such as sharing patient medical records or production data, require a high level of security in terms of both Information Assurance (IA) and Threat Protection (TP), especially since AR systems are highly mobile and may be misplaced. Information assurance means that we must be able to trust the information stored in the system as well as the information the system sends and receives. For comprehensive information assurance, we can use the Zynq secure boot feature, which supports encryption and verification through AES decryption together with HMAC and RSA authentication. Once the device is correctly configured and running, developers can use ARM TrustZone and a hypervisor to implement an orthogonal environment that is inaccessible to outsiders.
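To make the idea of HMAC authentication concrete, the sketch below tags a message with a keyed hash and verifies it on receipt, using Python's standard `hmac` and `hashlib` modules. It only illustrates the principle for data exchanged by an application; it is not the Zynq secure boot flow, which is carried out by the device itself, and the key and message shown are placeholders.

```python
import hashlib
import hmac


def sign(key: bytes, message: bytes) -> bytes:
    """Return an HMAC-SHA256 tag that authenticates message under key."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    """Check a received tag using a constant-time comparison."""
    return hmac.compare_digest(sign(key, message), tag)


if __name__ == "__main__":
    key = b"placeholder-shared-secret"        # hypothetical key for the example
    record = b"patient=1234;allergy=none"     # hypothetical payload
    tag = sign(key, record)
    print(verify(key, record, tag))                           # unmodified message
    print(verify(key, b"patient=1234;allergy=latex", tag))    # altered message
```

A receiver that holds the same key can detect any change to the message, because an altered payload no longer matches its tag. HMAC provides integrity and authenticity only; confidentiality would still need encryption such as AES.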

For threat prevention, these devices can use the system's built-in XADC to monitor supply voltage, current, and temperature to detect any attempt to tamper with the AR system. If this happens, the Zynq device offers a variety of options, including recording the attempt, erasing the security data, and preventing the AR system from reconnecting to the supporting infrastructure.
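The monitoring logic described above amounts to comparing each reading against an allowed window and reacting when any value falls outside it. The sketch below shows that logic in isolation. It does not call any real XADC driver, and the channel names, limits and response strings are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limits:
    low: float
    high: float


# Hypothetical allowed windows; real limits would come from the device data sheet.
LIMITS = {
    "vccint_v": Limits(0.95, 1.05),
    "temp_c": Limits(-20.0, 85.0),
}


def out_of_range(readings, limits=LIMITS):
    """Return the sorted names of monitored channels whose reading is outside its window."""
    return sorted(
        name
        for name, value in readings.items()
        if name in limits and not (limits[name].low <= value <= limits[name].high)
    )


def respond_to_tamper(readings, limits=LIMITS):
    """Return the list of responses to take; empty when all readings are normal."""
    violations = out_of_range(readings, limits)
    if not violations:
        return []
    return [
        "log attempt: " + ", ".join(violations),
        "erase secure data",
        "block reconnection to infrastructure",
    ]
```

The three responses mirror the options listed in the text: recording the attempt, erasing secure data and preventing reconnection. Which of them to apply, and in what order, is a policy decision for the system designer.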

Conclusion

AR systems are becoming increasingly popular across several major sectors, including commercial, industrial and military. They also present designers with a set of competing challenges: high performance, system-level security and energy efficiency. Using a Zynq SoC or Zynq UltraScale+ MPSoC as the core of the processing system can address these challenges.
