How to train convolutional neural networks and make predictions on encrypted data

Morten Dahl, a machine learning engineer at Datacho who holds a Ph.D. from Aarhus University, outlines how to implement a convolutional neural network (CNN) that operates on encrypted data for both training and prediction.

TL;DR: We adapted a classic CNN model to train and make predictions using encrypted data.

Convolutional Neural Networks (CNNs) have gained immense popularity in recent years due to their superior performance in image-related tasks. One notable application is the detection of skin cancer, where users can quickly take a photo of a skin lesion and receive an analysis through a mobile app. Access to extensive clinical datasets is crucial for model training, yet these datasets are often sensitive.

This raises privacy concerns and highlights the need for secure multi-party computation (MPC). How many applications are limited by the lack of accessible data? If allowing app users to contribute data could improve the model, how many would be willing to share personal health information?

Using MPC can mitigate the risk of exposing personal data, thereby increasing user participation. By training on encrypted data, we not only protect personal information but also prevent the leakage of learned model parameters. Other technologies like differential privacy can also help, but they are not discussed here.

This article explores a simplified image analysis case and introduces the necessary techniques. There are notebooks available on GitHub (mortendahl/privateml), with the main notebook providing a proof of concept implementation. Additionally, I presented this work at the Paris Machine Learning meetup, and the slides are available in the repository under ParisML17.pdf.

Many thanks to Andrew Trask, Nigel Smart, Adrià Gascón, and the OpenMined community for their inspiration and discussions on this topic.

Setup

We assume the training dataset is distributed across a set of input providers, and that the computation is performed by two distinct servers. These servers are trusted not to collude beyond what the protocol specifies. For example, they might be virtual instances in a shared cloud environment, each operated by a different organization.

Input providers only need to transfer their encrypted training data initially. After that, all calculations occur between the two servers, making it feasible for input providers to use devices like mobile phones. Once trained, the model remains encrypted, allowing secure predictions.

For technical reasons, we also assume a separate crypto provider that generates certain raw material used during the computation, in order to increase efficiency. While it is possible to eliminate this additional entity, this article does not cover how.

In terms of security, we aim for "honest-but-curious" security: the servers are assumed to follow the protocol, but may try to learn as much as possible from what they see. Although weaker than security against fully malicious servers, this still gives strong guarantees against any server compromise that happens after the computation. Note that we will slightly relax this privacy guarantee during training.

Image Analysis Based on CNN

Our use case is the classic MNIST handwritten digit recognition task, where the model learns to identify Arabic numerals in images. We base our model on the Keras example.

```python
from keras.models import Sequential
from keras.layers import Activation, Conv2D, Dense, Dropout, Flatten, MaxPooling2D

# NUM_CLASSES and the x_train, y_train, x_test, y_test arrays are assumed to be
# defined elsewhere (MNIST images with one-hot encoded labels)

feature_layers = [
    Conv2D(32, (3, 3), padding='same', input_shape=(28, 28, 1)),
    Activation('relu'),
    Conv2D(32, (3, 3), padding='same'),
    Activation('relu'),
    MaxPooling2D(pool_size=(2, 2)),
    Dropout(.25),
    Flatten()
]

classification_layers = [
    Dense(128),
    Activation('relu'),
    Dropout(.50),
    Dense(NUM_CLASSES),
    Activation('softmax')
]

model = Sequential(feature_layers + classification_layers)

model.compile(
    loss='categorical_crossentropy',
    optimizer='adam',
    metrics=['accuracy'])

model.fit(
    x_train, y_train,
    epochs=1,
    batch_size=32,
    verbose=1,
    validation_data=(x_test, y_test))
```

The details of this model are not discussed here, as there are already many resources online that explain them. However, the basic idea is to pass the image through a set of feature layers, transforming the original pixels into more relevant abstract properties for classification. These are then combined by classification layers to generate a probability distribution of possible numbers. The final output is usually the number with the highest probability.

As we'll see shortly, one advantage of using Keras is that we can quickly experiment on unencrypted data to get an idea of how the model itself behaves. At the same time, Keras offers a simple interface that we can later mimic in the encrypted setting.

Secure Computation Based on SPDZ

After the CNN is ready, let's look at the MPC. We will use the current state-of-the-art SPDZ protocol because it allows us to use only two servers and move specific calculations to the offline phase to improve online performance.

Like other typical secure computation protocols, SPDZ performs all computations in a field of integers modulo a prime Q. This means that the floating-point numbers used by the CNN must be encoded as integers modulo Q, which constrains the choice of Q and in turn affects performance.
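A common way to do this encoding is fixed-point representation, sketched below. The prime Q = 2**61 - 1 (a Mersenne prime) and the 16 fractional bits are illustrative assumptions, not the parameters of the accompanying notebook. Negative numbers are represented by their additive inverse modulo Q, i.e. by elements in the upper half of the field.

```python
Q = 2**61 - 1          # Mersenne prime, chosen here only for illustration
PRECISION_BITS = 16    # number of fractional bits (an assumption)
SCALE = 2**PRECISION_BITS


def encode(value: float) -> int:
    """Map a real number to a fixed-point element of Z_Q."""
    return round(value * SCALE) % Q


def decode(element: int, scale: int = SCALE) -> float:
    """Map an element of Z_Q back to a real number."""
    # elements in the upper half of the field represent negative numbers
    signed = element if element <= Q // 2 else element - Q
    return signed / scale


x, y = encode(1.5), encode(-0.25)
print(decode((x + y) % Q))                 # sums keep the same scale: 1.25
# a product carries twice the fractional bits; the protocol removes the extra
# factor by truncation, here we simply decode with the squared scale
print(decode((x * y) % Q, SCALE * SCALE))  # -0.375
```

The product case shows why the choice of Q matters: every multiplication temporarily doubles the number of bits needed, and the result must still fit below Q/2 to decode correctly.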

In interactive computing such as the SPDZ protocol, communication and round complexity are also considered. Communication complexity measures the number of bytes sent over the network, a relatively slow process. Round complexity measures the number of synchronization points between two servers, which may block one server while waiting for the other. Both factors significantly impact the total execution time.

More importantly, the only "native" operations of these protocols are addition and multiplication. Division, comparison, and similar operations can be done, but are more expensive in terms of complexity. We will see later how to mitigate some of these issues; first, we focus on the basic SPDZ protocol.

Tensor Operations

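The following code sketches the PrivateTensor class for the SPDZ protocol. Together with PublicTensor, it represents what the two servers know about a tensor: a PublicTensor is known to both servers in the clear, while a PrivateTensor is only known to them in secret-shared form.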

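```python
class PrivateTensor:
    """A tensor that the two servers only know in secret-shared form."""

    def __init__(self, values, shares0=None, shares1=None):
        if values is not None:
            # split the plaintext into two random-looking additive shares
            shares0, shares1 = share(values)
        self.shares0 = shares0  # held by server 0
        self.shares1 = shares1  # held by server 1

    @property
    def shape(self):
        return self.shares0.shape

    def reconstruct(self):
        # both servers reveal their shares; their sum is the plaintext
        return PublicTensor(reconstruct(self.shares0, self.shares1))

    def add(x, y):
        if type(y) is PublicTensor:
            # a public value only needs to be added to one of the shares
            shares0 = (x.shares0 + y.values) % Q
            shares1 = x.shares1
            return PrivateTensor(None, shares0, shares1)
        if type(y) is PrivateTensor:
            # adding shares locally yields shares of the sum
            shares0 = (x.shares0 + y.shares0) % Q
            shares1 = (x.shares1 + y.shares1) % Q
            return PrivateTensor(None, shares0, shares1)

    def mul(x, y):
        if type(y) is PublicTensor:
            # scaling each share by a public value scales the secret
            shares0 = (x.shares0 * y.values) % Q
            shares1 = (x.shares1 * y.values) % Q
            return PrivateTensor(None, shares0, shares1)
        if type(y) is PrivateTensor:
            # mask x and y with a fresh multiplication triple (a, b, a * b)
            a, b, a_mul_b = generate_mul_triple(x.shape, y.shape)
            alpha = (x - a).reconstruct()  # safe to reveal: a is uniformly random
            beta = (y - b).reconstruct()   # safe to reveal: b is uniformly random
            # x * y == alpha * beta + alpha * b + a * beta + a * b
            return alpha.mul(beta) + \
                alpha.mul(b) + \
                a.mul(beta) + \
                a_mul_b

    __add__ = add
    __mul__ = mul
    # sub/__sub__ (analogous to add) and the PublicTensor class are omitted here
```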

The code is straightforward. Of course, there are some technical details, which are covered in the attached notebook.

The basic utility functions used in the code above are:

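```python
import numpy as np

# Q (the prime modulus) and sample_random_tensor (uniform sampling modulo Q)
# are assumed to be defined elsewhere


def share(secrets):
    # the first share is uniformly random, so on its own it reveals nothing
    shares0 = sample_random_tensor(secrets.shape)
    shares1 = (secrets - shares0) % Q
    return shares0, shares1


def reconstruct(shares0, shares1):
    secrets = (shares0 + shares1) % Q
    return secrets


def generate_mul_triple(x_shape, y_shape):
    # computed by the crypto provider, independently of any private input
    a = sample_random_tensor(x_shape)
    b = sample_random_tensor(y_shape)
    c = np.multiply(a, b) % Q
    return PrivateTensor(a), PrivateTensor(b), PrivateTensor(c)
```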
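For readers without the notebook at hand, the following self-contained sketch runs the sharing and the triple-based multiplication (often called Beaver's trick) on integer NumPy arrays. It is a simplification under stated assumptions: both "servers" live in one process, the crypto provider is a plain function, the prime is the small Q = 2**31 - 1 so that products of two elements fit in int64, and there is no fixed-point encoding. The function names are hypothetical and do not come from the notebook.

```python
import numpy as np

Q = 2**31 - 1  # small Mersenne prime so that products of two elements fit in int64
rng = np.random.default_rng()


def share(secret):
    """Split an integer array into two additive shares modulo Q."""
    share0 = rng.integers(0, Q, size=secret.shape, dtype=np.int64)
    share1 = (secret - share0) % Q
    return share0, share1


def reconstruct(share0, share1):
    return (share0 + share1) % Q


def crypto_provider_mul_triple(shape):
    """Hypothetical crypto provider: sharings of random a, b and of c = a * b."""
    a = rng.integers(0, Q, size=shape, dtype=np.int64)
    b = rng.integers(0, Q, size=shape, dtype=np.int64)
    return share(a), share(b), share((a * b) % Q)


def private_mul(x_shares, y_shares):
    """Element-wise product of two secret-shared arrays using one fresh triple."""
    (a0, a1), (b0, b1), (c0, c1) = crypto_provider_mul_triple(x_shares[0].shape)
    # each server masks its own shares locally; only the masked values are opened
    alpha = reconstruct((x_shares[0] - a0) % Q, (x_shares[1] - a1) % Q)  # x - a
    beta = reconstruct((y_shares[0] - b0) % Q, (y_shares[1] - b1) % Q)   # y - b
    # x*y = alpha*beta + alpha*b + a*beta + a*b; the public term goes to server 0 only
    z0 = ((alpha * beta) % Q + (alpha * b0) % Q + (a0 * beta) % Q + c0) % Q
    z1 = ((alpha * b1) % Q + (a1 * beta) % Q + c1) % Q
    return z0, z1


x = np.array([3, 5, 7], dtype=np.int64)
y = np.array([2, 4, Q - 1], dtype=np.int64)  # Q - 1 encodes -1
z = reconstruct(*private_mul(share(x), share(y)))
print(z.tolist())  # [6, 20, 2147483640], i.e. [6, 20, -7 mod Q]
```

Each triple must be used only once; reusing one would let the opened values alpha and beta leak information about x and y.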

Adapting the Model

While in principle it is possible to securely compute any function with what we already have, including the model above, in practice it pays to first consider variants of the model that are more MPC-friendly, and vice versa. Put more vividly, we often need to open up both black boxes and adapt the two technologies to fit each other better.

The root cause is that some operations are surprisingly expensive in the encrypted setting. As mentioned before, addition and multiplication are relatively cheap, while comparison and division with a private denominator are not. For this reason, we make a few changes to the model to avoid these operations.

Many of the changes involved in this section and their corresponding performance can be found in the accompanying Python notebook.

Optimizer

The first change concerns the optimizer. Although many implementations choose Adam for its efficiency, Adam involves taking square roots of private values and dividing by private denominators. While these can in theory be computed securely, in practice they can become a significant performance bottleneck, so we want to avoid Adam.

A simple remedy is to switch to SGD with momentum, which may mean longer training but requires only simple operations.

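```python
from keras.optimizers import SGD

model.compile(
    loss='categorical_crossentropy',
    # very large clipping bounds: clipping effectively never triggers
    optimizer=SGD(clipnorm=10000, clipvalue=10000),
    metrics=['accuracy'])
```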

A related pitfall is that many optimizers use clipping to keep gradients from growing too large. Clipping requires comparing private values, which is a somewhat expensive operation in the encrypted setting, so we aim to avoid it altogether (in the code above, we set the clipping bounds so high that they effectively never apply).
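To see why SGD with momentum suits the encrypted setting, note that its update rule only adds arrays and multiplies them by public constants. The sketch below uses plain NumPy floats for readability; the learning rate and momentum values are illustrative assumptions, and the "velocity" formulation shown is one common variant rather than a claim about the exact update used in the notebook.

```python
import numpy as np

LEARNING_RATE = 0.01  # public constant (assumed value)
MOMENTUM = 0.9        # public constant (assumed value)


def momentum_sgd_step(weights, velocity, gradient):
    """One SGD-with-momentum update using only additions and public scalings.

    In the encrypted setting, weights, velocity, and gradient would all be
    secret-shared. Unlike Adam, nothing here needs a comparison, a square
    root, or a division by a private value.
    """
    velocity = MOMENTUM * velocity - LEARNING_RATE * gradient
    weights = weights + velocity
    return weights, velocity


w = np.array([1.0, -2.0])
v = np.zeros_like(w)
g = np.array([0.5, -0.5])
w, v = momentum_sgd_step(w, v, g)
```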

Network Layers

Speaking of comparisons, ReLU and max-pooling layers pose the same problem. CryptoNets replaces the former with a squaring function and the latter with average pooling, while SecureML implements a ReLU-like activation function (at the cost of added complexity, which this article avoids to keep things simple). We therefore use sigmoid activations, approximated by higher-degree polynomials, together with average-pooling layers. Note that average pooling also involves a division, but this time the denominator is public, so the division simply becomes a multiplication by the public reciprocal.

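```python
from keras.models import Sequential
from keras.layers import Activation, AveragePooling2D, Conv2D, Dense, Dropout, Flatten

# sigmoid instead of ReLU and average pooling instead of max pooling,
# so that no comparisons on private values are needed
feature_layers = [
    Conv2D(32, (3, 3), padding='same', input_shape=(28, 28, 1)),
    Activation('sigmoid'),
    Conv2D(32, (3, 3), padding='same'),
    Activation('sigmoid'),
    AveragePooling2D(pool_size=(2, 2)),
    Dropout(.25),
    Flatten()
]

classification_layers = [
    Dense(128),
    Activation('sigmoid'),
    Dropout(.50),
    Dense(NUM_CLASSES),
    Activation('softmax')
]

model = Sequential(feature_layers + classification_layers)
```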

Simulations show that this change requires us to increase the number of epochs, slowing down training accordingly. Other choices of learning rate or momentum may improve this.

```python
model.fit(
    x_train, y_train,
    epochs=15,
    batch_size=32,
    verbose=1,
    validation_data=(x_test, y_test))
```

The remaining layers are easy to handle. Dropout and flatten work the same whether the data is encrypted or not, and dense and convolution layers are (matrix) dot products, which only require basic operations.

Softmax and loss function

In the encrypted setting, the final softmax layer also complicates training, since it requires exponentiation with a private exponent as well as normalization by division with a private denominator.

Although both are possible, we choose a much simpler approach here: the predicted class likelihoods for each training sample are revealed to one of the servers, which then computes the result from the revealed values. This of course leaks private information, which may or may not be an acceptable risk.

A heuristic improvement is for the servers to first permute the vector of class likelihoods for each training sample before revealing anything, thereby hiding which likelihood corresponds to which class. However, this may have little effect if, for example, "healthy" often means a narrow distribution over classes while "sick" means a spread-out distribution.

Another option is to introduce a dedicated third server that only performs this small computation and learns nothing else about the training data, so that it cannot link labels to sample data. Some information is still leaked this way, but the amount is hard to reason about.

Finally, we could replace this one-vs-all approach with a one-vs-one approach using, for example, sigmoids. As argued earlier, this allows us to compute the predictions fully without decryption. We still need to compute the loss, however, which could be addressed by also considering a different loss function.

Note that none of these issues arise when using the trained network for prediction: there is no loss to compute, and the servers can simply skip the softmax layer and let the recipient of the prediction apply it, since for the recipient this is just a matter of how to interpret the values.
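On the recipient's side this can be as small as the sketch below: combine the two servers' shares of the last dense layer's output (the logits) and apply softmax locally. The function names are hypothetical, and using plain real-valued shares instead of fixed-point elements modulo Q is a simplifying assumption.

```python
import numpy as np


def softmax(logits):
    # subtracting the row maximum improves numerical stability without changing the result
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exps = np.exp(shifted)
    return exps / exps.sum(axis=-1, keepdims=True)


def recipient_predict(logit_share0, logit_share1):
    """Hypothetical recipient step: reconstruct the logits, then apply softmax."""
    logits = logit_share0 + logit_share1  # reconstruction (real-valued for simplicity)
    probabilities = softmax(logits)
    return probabilities, probabilities.argmax(axis=-1)
```

Since softmax preserves the ordering of the logits, a recipient who only needs the predicted class could even skip it and take the argmax of the logits directly.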

Transfer Learning

At this point it seems that we can actually train the model as it is and get good results. However, as is common practice with CNNs, we can speed up training significantly by using transfer learning; in fact, it is somewhat well known that "very few people train their own convolutional networks from scratch because they don't have enough data" and that "it is always recommended to use transfer learning in practice".

In our setting, one particular application of transfer learning is to split training into two phases: a pre-training phase using non-sensitive public data, and a fine-tuning phase using sensitive private data. For example, in the case of skin cancer detection, researchers might pre-train on a public collection of photos and then ask volunteers to contribute additional photos to improve the model.

Besides a difference in size, the two datasets may also differ in subject matter, since CNNs tend to first decompose the input into meaningful subcomponents, and it is the ability to recognize these that is transferred. In other words, the technique is powerful enough that pre-training can be performed on a different type of images than fine-tuning.

Returning to our concrete character recognition use case, we let the "public" images be those of digits 0-4 and the "private" images be those of digits 5-9. As an alternative, it would not seem unreasonable to instead use, say, the characters a-z as the public images and the digits 0-9 as the private ones.
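The split itself takes only a few lines. In this sketch, remapping the private digits 5-9 to labels 0-4 is our assumption, made so that both phases can share one five-way output layer; the commented usage assumes `keras.datasets.mnist.load_data()`, which downloads the data on first use.

```python
import numpy as np


def split_by_digit(x, y):
    """Split images into 'public' (digits 0-4) and 'private' (digits 5-9) subsets.

    Private labels are shifted down by 5 (an assumption) so that both
    subsets use the labels 0..4.
    """
    public = y < 5
    return (x[public], y[public]), (x[~public], y[~public] - 5)


# from keras.datasets import mnist
# (x_train, y_train), (x_test, y_test) = mnist.load_data()
# public_train, private_train = split_by_digit(x_train, y_train)
```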

Pre-training on public data sets

Besides avoiding the overhead of training on encrypted data, pre-training on the public dataset also lets us use more advanced optimizers. Here, for example, we go back to the Adam optimizer to speed up training on the images; in particular, this reduces the number of epochs needed.

```python
# public_dataset holds the 'public' images (digits 0-4); it is defined elsewhere
(x_train, y_train), (x_test, y_test) = public_dataset

model.compile(
    loss='categorical_crossentropy',
    optimizer='adam',
    metrics=['accuracy'])

model.fit(
    x_train, y_train,
    epochs=1,
    batch_size=32,
    verbose=1,
    validation_data=(x_test, y_test))
```

Once we are satisfied with the pre-training results, we simply share the model parameters with the servers and move on to training on the private dataset.

Fine-tuning on the private dataset

Since encrypted training now starts from model parameters that are already "half-way there", we can expect to need fewer epochs. As mentioned earlier, transfer learning has another benefit: recognition of subcomponents tends to happen in the lower layers of the network and may in some cases be used as is. We therefore freeze the parameters of the feature layers and focus training exclusively on the classification layers.

```python
for layer in feature_layers:
    layer.trainable = False
```

Note that we still need to pass all private training samples forward through these layers; the only difference is that we skip them in the backpropagation step, so there are fewer parameters to train.

Training then proceeds as before, except that we now use a lower learning rate:

```python
from keras.optimizers import SGD

# private_dataset holds the 'private' images (digits 5-9); it is defined elsewhere
(x_train, y_train), (x_test, y_test) = private_dataset

model.compile(
    loss='categorical_crossentropy',
    optimizer=SGD(clipnorm=10000, clipvalue=10000, learning_rate=0.1, momentum=0.0),
    metrics=['accuracy'])

model.fit(
    x_train, y_train,
    epochs=5,
    batch_size=32,
    verbose=1,
    validation_data=(x_test, y_test))
```

In the end, this reduces the number of epochs from 25 to 5.

Preprocessing

There are a handful of preprocessing optimizations that could be applied, but we do not pursue them further here.

The first is to move the computation of the frozen layers to the input providers, so that what they share with the servers is the output of the flatten layer rather than the pixels of the images. In this case the layers are said to perform feature extraction, and we could potentially use more powerful layers. However, if we want to keep the model proprietary, this adds significant complexity, since the parameters now have to be distributed to the clients in some form.

Another typical way to speed up training is to first apply a dimensionality reduction technique such as principal component analysis. This approach is taken in the encrypted setting of BSS+'17.

Adapting the Protocol

Having looked at the model, we now turn to the protocol. As we shall see, understanding the operations we want to perform can help speed things up.

In particular, a lot of computation can be moved to the crypto provider, since the raw material it generates is independent of the private inputs and, to some extent, even of the model. Its computation can therefore be done in advance, in bulk, whenever convenient.

Recall that we want to optimize both round complexity and communication complexity. The extensions suggested here often improve both, but at the cost of additional local computation, so practical experiments are needed to verify their benefits in concrete settings.

Dropout

Starting with the simplest type of layer, we note that nothing specific to secure computation happens here: the layer only requires the two servers to agree on which values to drop in each training iteration. This can be achieved by simply agreeing on a seed value.
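A minimal sketch of that agreement, assuming the servers have already exchanged a seed: each derives the same per-iteration mask from the seed and the iteration counter and applies it to its own shares. Seeding NumPy's `default_rng` with `[seed, iteration]` is our illustrative choice (a deployment might prefer a cryptographic PRG), the prime Q is an assumption, and the rescaling by 1 / (1 - rate) that Keras applies during training is omitted since it is just another multiplication by a public constant.

```python
import numpy as np

Q = 2**31 - 1  # illustrative prime modulus (assumption)


def dropout_mask(seed, iteration, shape, rate):
    """Both servers call this with the same arguments and obtain the same mask."""
    rng = np.random.default_rng([seed, iteration])
    return (rng.random(shape) >= rate).astype(np.int64)  # 1 = keep, 0 = drop


def apply_dropout_to_share(share, seed, iteration, rate):
    # multiplying by a public 0/1 mask is a purely local operation on each share
    return (share * dropout_mask(seed, iteration, share.shape, rate)) % Q
```

Because the mask is public to both servers, applying it to each share separately yields shares of the masked secret without any communication.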

Average pooling

The forward pass of average pooling only requires a summation followed by division by a public denominator. It can therefore be implemented as multiplication by a public value: since the denominator is public, we can easily compute its reciprocal, then multiply and truncate. Likewise, the backward pass is nothing more than a scaling, so both directions are entirely local operations.
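The sketch below illustrates why this stays local: each server sums its own shares over every 2x2 window, which gives shares of the window sums, and the division by 4 becomes multiplication by the public fixed-point constant encode(1/4). It assumes non-overlapping 2x2 windows, Q = 2**31 - 1, and 8 fractional bits; to keep it short, it skips the truncation step and instead decodes with the doubled scale factor.

```python
import numpy as np

Q = 2**31 - 1   # illustrative prime modulus (assumption)
SCALE = 2**8    # 8 fractional bits (assumption)


def encode(values):
    return np.round(np.asarray(values) * SCALE).astype(np.int64) % Q


def decode(elements, scale=SCALE):
    signed = np.where(elements > Q // 2, elements - Q, elements)
    return signed / scale


def sum_pool_2x2(share):
    """Sum each non-overlapping 2x2 window of an (H, W) share; purely local."""
    h, w = share.shape
    return share.reshape(h // 2, 2, w // 2, 2).sum(axis=(1, 3)) % Q


def average_pool_share(share):
    quarter = int(encode(0.25))  # public reciprocal of the window size
    # the result carries 2 * 8 fractional bits until it is truncated
    return (sum_pool_2x2(share) * quarter) % Q
```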

Dense layer

Both the forward and the backward pass of a dense layer require a dot product, which can of course be computed with classical multiplications and additions. To compute dot(x, y) for matrices x and y of shapes (m, k) and (k, n), this requires m * n * k multiplications, meaning that we have to communicate the same number of masked values. While these can all be sent in parallel, so that only one round is needed, using another kind of preprocessed triple can reduce the communication cost by an order of magnitude.

For example, the second dense layer of our model computes a dot product between a (32, 128) and a (128, 5) matrix. Using the typical approach, each batch requires sending 32 * 5 * 128 == 20480 masked values, whereas with the preprocessed triples described below we only need to send 32 * 128 + 128 * 5 == 4736 values, an improvement of more than 4x. For the first dense layer the effect is even greater, an improvement of about 25x.

The trick is to make sure that each private value in the matrices is masked and sent only once. To achieve this, we need a triple (a, b, c), where a and b are random matrices of the appropriate shapes and c satisfies c == dot(a, b).

```python
def generate_dot_triple(x_shape, y_shape):
    a = sample_random_tensor(x_shape)
    b = sample_random_tensor(y_shape)
    c = np.dot(a, b) % Q
    return PrivateTensor(a), PrivateTensor(b), PrivateTensor(c)
```

Given such a triple, we can instead communicate the values of alpha = x - a and beta = y - b, and then get dot(x, y) by local calculation.

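```python
class PrivateTensor:

    ...

    def dot(x, y):
        if type(y) is PublicTensor:
            # a public matrix can be applied to each share locally
            shares0 = x.shares0.dot(y.values) % Q
            shares1 = x.shares1.dot(y.values) % Q
            return PrivateTensor(None, shares0, shares1)
        if type(y) is PrivateTensor:
            a, b, a_dot_b = generate_dot_triple(x.shape, y.shape)
            alpha = (x - a).reconstruct()  # each entry of x is masked and sent once
            beta = (y - b).reconstruct()   # each entry of y is masked and sent once
            # dot(x, y) == dot(alpha, beta) + dot(alpha, b) + dot(a, beta) + dot(a, b)
            return alpha.dot(beta) + \
                alpha.dot(b) + \
                a.dot(beta) + \
                a_dot_b
```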
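Here is a self-contained NumPy sketch of the dot-product triple, with both servers and the crypto provider simulated in one process. The helper names are hypothetical, there is no fixed-point encoding, and the small prime Q = 2**19 - 1 is an assumption chosen so that int64 matrix products cannot overflow for inner dimensions up to a few million. The function also counts the opened masked values, which matches the m * k + k * n figure from the text.

```python
import numpy as np

Q = 2**19 - 1  # small Mersenne prime so int64 dot products cannot overflow (assumption)
rng = np.random.default_rng()


def share(secret):
    share0 = rng.integers(0, Q, size=secret.shape, dtype=np.int64)
    return share0, (secret - share0) % Q


def reconstruct(share0, share1):
    return (share0 + share1) % Q


def crypto_provider_dot_triple(x_shape, y_shape):
    """Hypothetical crypto provider: sharings of random a, b and of c = dot(a, b)."""
    a = rng.integers(0, Q, size=x_shape, dtype=np.int64)
    b = rng.integers(0, Q, size=y_shape, dtype=np.int64)
    return share(a), share(b), share(a.dot(b) % Q)


def private_dot(x_shares, y_shares):
    """dot(x, y) for secret-shared x (m, k) and y (k, n); also returns #opened values."""
    (a0, a1), (b0, b1), (c0, c1) = crypto_provider_dot_triple(
        x_shares[0].shape, y_shares[0].shape)
    alpha = reconstruct((x_shares[0] - a0) % Q, (x_shares[1] - a1) % Q)  # m * k values
    beta = reconstruct((y_shares[0] - b0) % Q, (y_shares[1] - b1) % Q)   # k * n values
    opened = alpha.size + beta.size
    # dot(x, y) = dot(alpha, beta) + dot(alpha, b) + dot(a, beta) + dot(a, b)
    z0 = (alpha.dot(beta) + alpha.dot(b0) + a0.dot(beta) + c0) % Q
    z1 = (alpha.dot(b1) + a1.dot(beta) + c1) % Q
    return (z0, z1), opened


# shapes of the second dense layer discussed above
x = rng.integers(0, 100, size=(32, 128), dtype=np.int64)
y = rng.integers(0, 100, size=(128, 5), dtype=np.int64)
(z0, z1), opened = private_dot(share(x), share(y))
assert np.array_equal(reconstruct(z0, z1), x.dot(y) % Q)
print(opened)  # 4736 == 32 * 128 + 128 * 5
```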

The security of these triples follows the same argument as for multiplication triples: the communicated masked values perfectly hide x and y, while c, being an independent fresh sharing, ensures that the result cannot leak anything about how it was computed.

Note that this kind of triple is used in SecureML, which also gives techniques that allow the servers to generate the triples themselves without the help of a crypto provider.

Convolution

Like dense layers, convolutions can be treated either as a series of scalar multiplications or as a matrix multiplication, although the latter only after first expanding the tensor of training samples into a matrix with significant duplication. Unsurprisingly, both lead to communication costs that can be improved by introducing another kind of triple.

For example, the first convolution layer uses 32 kernels of shape (3, 3, 1) to map a tensor of shape (m, 28, 28, 1) to one of shape (m, 28, 28, 32) (ignoring the bias vector). For a batch size of m == 32, using only scalar multiplications means communicating 7,225,344 elements, and using matrix multiplication means 226,080 elements. However, since only (32*28*28) + (32*3*3) == 25,376 private values are involved in total (again not counting the bias vector, since it only requires addition), there is an overhead of roughly 9x; in other words, each private value is masked and sent several times. With a new kind of triple we can remove this overhead and save on communication: for 64-bit elements, this means about 200KB per batch instead of the corresponding 1.7MB and 55MB.

The triples (a, b, c) needed here are similar to those used for dot products: a and b have shapes matching the inputs, i.e. (m, 28, 28, 1) and (32, 3, 3, 1), while c is the convolution of a with b and matches the output shape (m, 28, 28, 32).
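The communication figures quoted above can be reproduced with a few lines of arithmetic. This sketch follows the same accounting as the text (one masked value per scalar multiplication, bias vectors ignored, 8 bytes per 64-bit element); the helper function is ours. The byte counts come out in binary units (1 MiB = 2**20 bytes), which is how the rounded 200KB, 1.7MB, and 55MB figures arise.

```python
def conv_communication(m=32, height=28, width=28, in_channels=1,
                       kernels=32, kernel_h=3, kernel_w=3):
    """Masked values sent per batch for one 'same'-padded convolution layer."""
    positions = m * height * width                # output positions per kernel
    patch = kernel_h * kernel_w * in_channels     # inputs feeding one output value
    scalar = positions * kernels * patch          # one mask per scalar product
    matmul = positions * patch + patch * kernels  # expanded input matrix + kernel matrix
    triple = m * height * width * in_channels + kernels * patch  # each value sent once
    return scalar, matmul, triple


scalar, matmul, triple = conv_communication()
print(scalar, matmul, triple)     # 7225344 226080 25376
print(round(matmul / triple, 1))  # 8.9
for count in (triple, matmul, scalar):
    print(f"{count * 8 / 2**20:.1f} MiB")  # 0.2 MiB, 1.7 MiB, 55.1 MiB (one per line)
```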

Sigmoid activation

As we have done before, we can approximate the sigmoid activation function to a sufficient degree of accuracy using a 9-term polynomial. Evaluating this polynomial on a private value x requires computing a series of powers of x, which can of course be done by a sequence of multiplications; but this means many rounds and a corresponding amount of communication.
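To get a feel for such an approximation, the sketch below fits a polynomial to the sigmoid by least squares with NumPy and measures the worst-case error on the fitted range. The interval [-4, 4], the degree 9, and least-squares fitting are our assumptions for illustration; the polynomial referred to in the text may have been obtained differently. Any such polynomial diverges quickly outside the fitted interval, so the range should cover the values the activations actually take.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


# least-squares fit over an assumed input range; degree 9 is an illustrative choice
xs = np.linspace(-4.0, 4.0, 1001)
coeffs = np.polyfit(xs, sigmoid(xs), deg=9)  # coefficients, highest power first
approx = np.polyval(coeffs, xs)
max_error = np.max(np.abs(approx - sigmoid(xs)))
print(f"max error on [-4, 4]: {max_error:.1e}")
```

Only additions and multiplications are needed to evaluate the result, which is exactly what makes it MPC-friendly.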

Instead, we can again use a new kind of preprocessed triple that allows us to compute all required powers in a single round. The length of these "triples" is not fixed but equals the highest exponent: for example, a triple for squaring consists of independent sharings of a and a**2, while one for cubing consists of independent sharings of a, a**2, and a**3.

Once we have these powers of x, evaluating a polynomial with public coefficients is merely a local weighted sum. The security of this again follows from the fact that all powers in the triple are independently shared.

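```python
def pol_public(x, coeffs, triple):
    # pows (defined elsewhere) returns shared powers of x, using the triple
    powers = pows(x, triple)
    # with public coefficients, a weighted sum of shared values is a local operation
    return sum(xe * ce for xe, ce in zip(powers, coeffs))
```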
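One way to use such power triples is sketched below for a single secret-shared scalar. The crypto provider hands out independent sharings of a, a**2, ..., a**n; the servers open epsilon = x - a once, and since x = epsilon + a, the binomial theorem gives x**k as the sum over i of C(k, i) * epsilon**(k - i) * a**i, a weighted sum of the shared powers of a with public weights. This expansion and all names here are our own illustration (the notebook's `pows` may work differently); the prime Q = 2**61 - 1 and plain Python integers are assumptions, and fixed-point scaling is left out.

```python
import random
from math import comb

Q = 2**61 - 1  # illustrative prime modulus (assumption)


def share(value):
    share0 = random.randrange(Q)
    return share0, (value - share0) % Q


def generate_pows_triple(degree):
    """Hypothetical crypto provider: independent sharings of a, a**2, ..., a**degree."""
    a = random.randrange(Q)
    return [share(pow(a, i, Q)) for i in range(1, degree + 1)]


def pows(x_shares, triple):
    """Shares of x, x**2, ..., x**n from a single opening of x - a (use each triple once)."""
    a_pows = [(1, 0)] + triple  # a**0 == 1 is public; server 0 holds it
    # the only communication: both servers open epsilon = x - a
    epsilon = (x_shares[0] - a_pows[1][0] + x_shares[1] - a_pows[1][1]) % Q
    powers = []
    for k in range(1, len(triple) + 1):
        s0, s1 = 0, 0
        for i in range(k + 1):
            weight = comb(k, i) * pow(epsilon, k - i, Q) % Q  # public weight
            s0 = (s0 + weight * a_pows[i][0]) % Q
            s1 = (s1 + weight * a_pows[i][1]) % Q
        powers.append((s0, s1))
    return powers


def evaluate_polynomial(x_shares, coeffs, triple):
    """Shares of coeffs[0] + coeffs[1]*x + ... with public integer coefficients."""
    powers = [(1, 0)] + pows(x_shares, triple)  # x**0 == 1, held by server 0
    return tuple(sum(c * p[s] for c, p in zip(coeffs, powers)) % Q for s in (0, 1))


y0, y1 = evaluate_polynomial(share(42), [1, 2, 0, 3], generate_pows_triple(3))
print((y0 + y1) % Q)  # 222349 == 1 + 2*42 + 3*42**3
```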

As before, however, we run into the issue of fixed-point precision: higher powers require more room, since x**n has n times the precision of x, and we must make sure it does not wrap around modulo Q, or we can no longer decode it correctly. We can solve this by introducing a sufficiently larger field P that we temporarily switch to while computing the powers, at the cost of two extra rounds of communication.

Practical experiments will show whether it is better to stay in Q and use a few more multiplication rounds, or to pay for the switch and the arithmetic on larger numbers. In particular, the former seems preferable for low-degree polynomials.

Proof of Concept

There is a

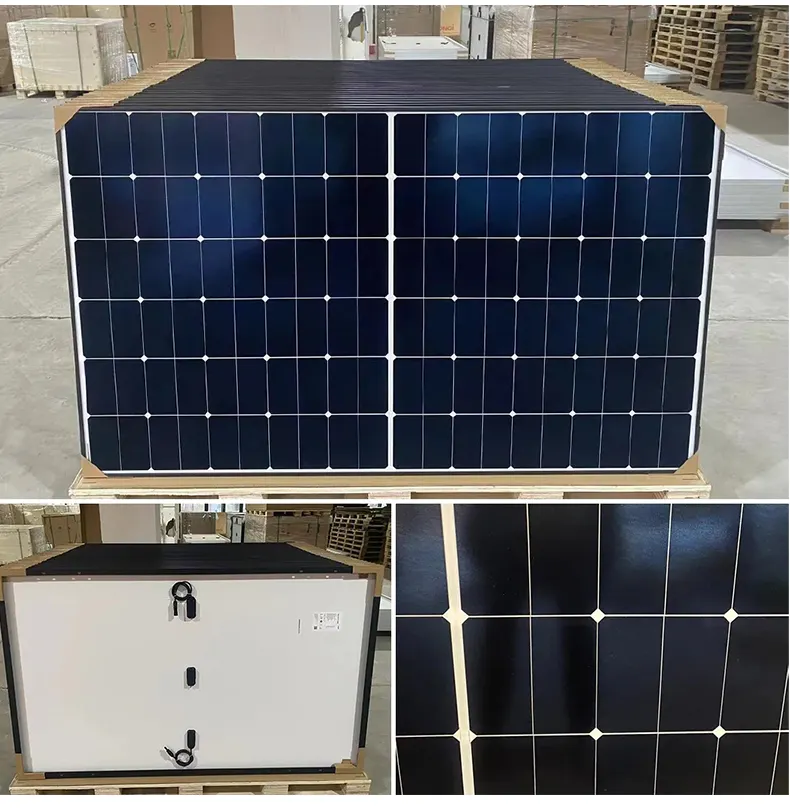
